Data Innovation Day: Big Data and the U.S. Census

The following post explores just one of the many talks at the Center for Data Innovation’s Data Innovation Day conference.

It’s hard to hold the attention of a large crowd during a meal, but Eric Newburger’s lunch keynote entitled “Why the Census? Big Data From the Enlightenment to Today” was a clear favorite for many attendees at Data Innovation Day. In the talk, Newburger explored the history of the U.S. Census Bureau — arguably one of the most data-rich resources in the United States.

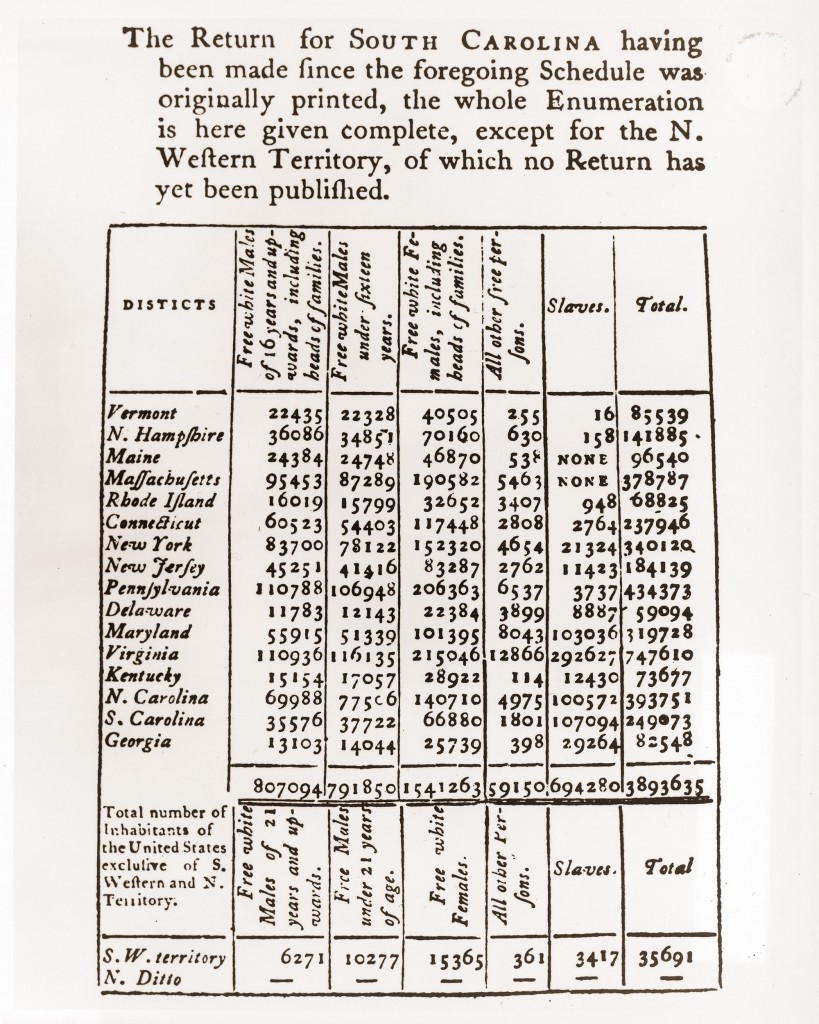

Image of the 1790 U.S. Census from census.gov

This treasure trove of information got its start with the very first census in 1790. That survey asked nine data items of each household, and with 558,000 households in the United States, Newburger calculates that the original census-takers had to wrangle 5 million data fields, or the equivalent of 20 megabytes of data. Even more impressive is the fact that this was all done by quill pen, not the computers we rely on today.

The daunting amount of data early census takers faced didn’t deter them from their goal: taking the measure of each and every U.S. citizen. Sixty years later, in 1850, the Bureau took on even more data by asking about individual people, not just households, which is the same method that we use today. These early census workers realized a truth that the Census Bureau still stands by: data is important. So important, in fact, that George Washington did the very first agricultural survey in the U.S. on his own dime, simply because he realized the necessity of taking stock of the population.

The U.S. Census Bureau is still a rich resource for data nerds. Back in the early years of the country, “official data” like that taken from the U.S. Census and tax records was the predominant source of knowledge. Thanks to the ubiquity of constantly data-collecting smartphones, social media, and more, we now have much more “other data” than we will ever have “official data.” Even the official data is immense, however. American FactFinder, the Census’ statistics website, had 370 billion cells as of 2013, with 11 billion more added every year. This collection of data is so immense, Newburger said, that “I promise you, we don’t know everything in there.”

He gave the audience a good idea of the sheer amount of data the U.S. Census is privy to when he summarized the Bureau’s mission, saying:

We count everyone, everywhere, since 1790.

Not too shabby! Newburger went on to point out that data sets like the “LED” (Local Employment Dynamics) make for great data visualizations, illustrating the point with a map of local D.C. jobs with a salary of $40,000 or more. This is just one of many of the rich data sets the Census Bureau offers, which tally everything from tourist statistics in New York to housing prices and the number and type of disabilities in local communities. The U.S. Census Bureau even has an API, making this the perfect playground for budding data scientists.

In fact, this was the takeaway of Newburger’s talk: if any “data nerds” are interested in exploring raw, unanalyzed data, the U.S. Census is truly the source for it. For a crowd that had gathered to celebrate the infinite uses of public, open datasets, this was music to our ears.

Want to get involved? For more on the Census Bureau, check out their public-facing website, developer site, and American FactFinder.